The recent release of ChatGPT by OpenAI has reignited the debate about the uses of AI and what they will mean for humanity. Is AI dangerous? Will ChatGPT and technologies like it take our jobs? How do we design AI so it can help humanity? These questions, and many more, are circulating throughout the media and the internet. With the technology still in its infancy, there are no clear answers yet, which makes asking these questions all the more important: they can help guide AI, including social robots, onto a more responsible and beneficial path.

The concerns people have about AI are not unfounded. Like any technology, AI can be used with malicious intent and it is powerful enough to cause serious harm, especially as it is now deeply ingrained in industries that are essential for a functioning society, including finance, banking, manufacturing, education and healthcare. With such widespread influence, and as AI grows in sophistication and power, it is no surprise that people are worried.

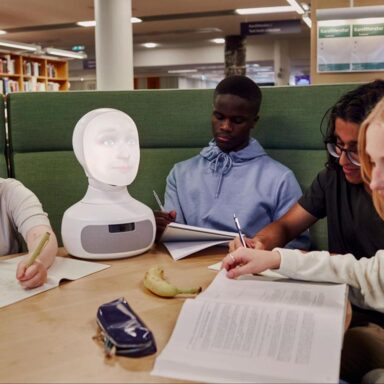

Some of the fears surrounding AI (and by extension AI-powered robots like Furhat) are exaggerated and dystopian – it is not inevitable that AI will take over the world, kill us all and fully replace humanity. However, as political philosopher and Harvard professor Michael Sandel says, there are some major areas of concern regarding AI. These are privacy and surveillance, and bias and discrimination, as well as the impact these can have on human judgment and decision making. Being aware of the perils is the first step toward preventing them, and it’s in everyone’s interest to do so.

Privacy and Surveillance

With access to records of all kinds, such as medical and financial records, and the power to analyze big data, AI poses a risk to privacy if not managed and regulated carefully. Consent and transparency about what data is being collected, how it’s being used, where it’s going and who is seeing it are a must if AI is to benefit humanity. Otherwise, AI can be used to obtain very detailed data about people in order to manipulate, deceive and control them. Social media platforms already use AI in this way, and it has contributed to the polarization and isolation that have come to define today’s society.

Social robots like Furhat do not yet form part of the data-gathering machine that is social media, but they are social interfaces that humans interact with, and they therefore need to collect data in order to function efficiently and provide high-quality interactions. This means privacy concerns are just as important for social robots as they are for other kinds of social interfaces powered by AI.

The good news is that social robots and new AI tools do not have to become new agents of surveillance capitalism. Data protection policies can be designed into them to prevent this kind of abuse. It’s up to us. At the end of the day, AI does what it is programmed by humans to do.

Bias and Discrimination

One of the arguments in favor of AI is that it can reduce bias and discrimination in different processes by removing human subjectivity from the equation. AI can do this in certain circumstances by removing elements of human prejudice, but what this argument forgets is that AI is programmed by humans and the datasets fed to AI are made by humans. This means that biases can be programmed and fed into AI, either consciously or unconsciously.

Algorithmic decision making and machine learning are of particular concern here because as AI becomes more advanced and its dataset grows as it learns, it becomes more difficult for humans (even those who programmed the AI) to understand what patterns the AI is identifying and how it’s interpreting those patterns to make decisions.

Text generated by systems like ChatGPT, for example, can find its way back into the data used to train later models. This means that there is a risk that AI can get stuck in a closed loop that reinforces a particular logic or outcome. This is particularly scary if we think about the AI systems used in military drones to identify targets or AI software in healthcare used to decide who will receive a donor organ. It’s easy to see how this dilutes human judgment and decision-making power.

This all paints a rather bleak picture of AI, but there is a bright side. People who work with AI are just as concerned about these issues (if not more so) as the general public. Research is ongoing on how to make AI processes more transparent. Efforts are underway to build “explainable AI” or “algorithmic accountability reporting” to ensure that we can retain oversight and agency over AI. One can imagine a social robot being particularly well suited for this as it could verbally walk us through its reasoning when asked or prompted.

Another solution that has been proposed is to make AI more human and not less by injecting greater empathy and moral reasoning into its processes so its decisions are not purely based on pattern identification and predictive analytics. This is where social robots will shine. They can not only be programmed with empathy, they can also express it.

The Potential

Exploring the ethical concerns of AI is really important to ensure that we continue developing the technology as responsibly as possible, but it can leave us overly focused on the negative. The reality is that AI is a very valuable technology that has huge potential for good.

In healthcare, for example, AI could help save thousands of lives by giving doctors access to all the medical knowledge available about a particular disease, including information about all past cases. This is where you may ask yourself: If AI has all this medical knowledge, will we even need human doctors?

The answer is yes. Medical AI is not being developed to make doctors redundant. It is intended to be a supportive tool that can help doctors make more informed decisions so they can provide higher quality care with better outcomes. AI can also help medical personnel save countless hours by handling the administration and paperwork, thereby allowing human staff to focus on what matters most – patient care.

In education, AI could help millions of students around the world complete secondary education by personalizing courses for the needs of each individual student. AI can also fill existing teaching and learning gaps in schools to ensure the overall quality of education is high no matter where you live.

When it comes to tackling global problems like climate change, AI’s ability to quickly analyze and interpret vast amounts of complex data can help improve conservation and emission reduction efforts, as well as determine the environmental impact of different projects.

AI can even help accelerate research and scientific discoveries. DeepMind’s project AlphaFold, for instance, uses AI to do the notoriously complex job of accurately predicting the shape of proteins based on their amino acid sequences. This is revolutionary when it comes to finding treatments for disease with less reliance on long and costly experimentation. We already saw the value of using AI in this way during the COVID-19 pandemic, when AI modeling helped researchers develop vaccines and treatments at record speeds. And this just scratches the surface of what AI can do for us.

Like every new thing, AI is scary at first. Then comes the rationalization, the vision for the potential and the willingness to experiment. That’s how we function as humans. When the first cars appeared in the late 1800s, people were initially very skeptical and hostile to the idea of replacing horses, but eventually automobiles were adopted en masse, leading to the transformation of transport and economies around the world.

With AI, the potential for positive impact is immense. AI can improve efficiency and bring down costs in nearly every field by supporting people with time-consuming technical and administrative tasks, giving them more time to focus on more important responsibilities like decision making and execution. Furthermore, far from simply taking jobs away from people, AI is expected to create new jobs that are fulfilling and valuable. To paraphrase Joseph Fuller, who co-leads Harvard Business School’s Managing the Future of Work project, we are going to see humans in roles where they can apply their judgment, expertise, creativity and empathy to enhance the valuable information that AI provides.

Conclusion

As we have seen, AI’s potential for harm is counterbalanced by its huge potential for good. We should not immediately frown at the release of something like ChatGPT. Instead, we should ask ourselves: how can this help? What potential areas of concern can we identify, and how can we address them? Fear should not stop us from developing the technology further so it can really help people. It should make us proceed with caution so we can prevent the most harm and create the most good.