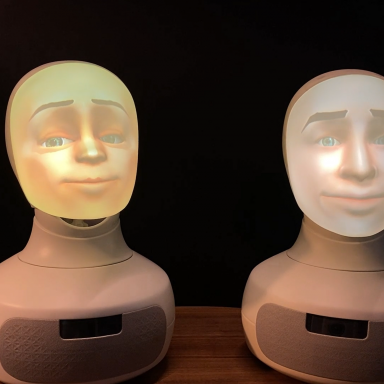

This is the second of two posts on the key features of Furhat’s face. In the previous post we discussed one of the unique features of the Furhat robot, namely how it is possible to create and customize faces and characters. In this post, we look at another defining feature: the unmatched facial expressiveness that comes with back-projection. We also discuss new tools and technologies that take this expressiveness to the next level.

If you are familiar with the Furhat robot, you may know that it comes with a Software Development Kit (SDK) for developing advanced human-robot interaction applications, referred to as ‘skills’. Skills offer a comprehensive way to author not only the spoken part of the interaction (speech in/out) but also the non-verbal parts, allowing the robot to react to actions (e.g. visual attention or a smile from the user) and also display facial gestures. The SDK includes several pre-defined gestures, and it allows developers to define custom gestures through code. This is flexible, but it can be tedious and difficult to define new convincing gestures procedurally.

So, how can we make the creation of high-quality animation easier, faster and also accessible to non-animators? The solution is motion capture technology: driving the robot’s facial expression, eye gaze and head motion from the actions of a real human face. Let’s have a look at how it works (hint: it doesn’t require a Hollywood studio; a decent smartphone is enough!).

In the previous post we introduced Furhat’s new face engine, FaceCore, which greatly increases the fidelity, customizability and expressive capabilities of the Furhat robot. The main task of the face engine is to render the face of the robot and animate it in real time under the control of the Furhat system. The face engine is built on industry-standard game engine technology, which means that it runs just as well on the physical robot as in the simulation environment Virtual Furhat on Windows, Mac or Linux.

Face motion is controlled by animation parameters known as blend shapes. These work much like the strings of a marionette: when a string is pulled, a corresponding action is performed, e.g. the jaw opens or an eye closes.
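To make the marionette analogy concrete, here is a minimal Python sketch of the standard linear blend shape model, in which each parameter moves a set of vertices by a weighted offset. It illustrates the general technique rather than FaceCore's actual implementation; the function name, the toy two-vertex mesh and the offsets used for `jawOpen` are assumptions made for illustration.

```python
def apply_blend_shapes(neutral, deltas, weights):
    """Return the deformed vertices of a face mesh.

    neutral: list of (x, y, z) vertex positions for the resting face.
    deltas:  dict mapping a parameter name to per-vertex (dx, dy, dz)
             offsets at full activation (weight 1.0).
    weights: dict mapping a parameter name to its current weight.
    """
    result = [list(vertex) for vertex in neutral]
    for name, weight in weights.items():
        # Each active parameter pulls its vertices by weight * offset.
        for index, offset in enumerate(deltas[name]):
            for axis in range(3):
                result[index][axis] += weight * offset[axis]
    return [tuple(vertex) for vertex in result]


# Toy mesh (hypothetical): one chin vertex and one forehead vertex.
neutral_face = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0)]
shape_deltas = {"jawOpen": [(0.0, -1.0, 0.0), (0.0, 0.0, 0.0)]}

half_open = apply_blend_shapes(neutral_face, shape_deltas, {"jawOpen": 0.5})
```

Pulling the `jawOpen` "string" halfway moves only the chin vertex, and only half of its full offset. Offsets from several active parameters simply add up in this model.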

The standard Furhat animation parameter set, which was also available before the introduction of FaceCore, consists of 46 parameters. These can be grouped into expressions (affecting the whole face, e.g. “Angry”, “Sad”), modifiers (affecting individual parts, e.g. “browUp”, “blinkLeft”) and phoneme shapes (for speech animation, e.g. “Ah”). FaceCore incorporates a second set of animation parameters, known as ARKit parameters. These were introduced by Apple with the launch of the iPhone X. What makes ARKit facial animation parameters particularly interesting is that they can be reliably estimated in real time using the front-facing camera of an iPhone X (or later), thereby providing a cost-effective and accurate solution for facial motion capture. ARKit has had a significant impact on facial animation workflows and is used extensively, e.g. in the computer game industry, and now also in social robotics!

The ARKit parameter set consists of 52 low-level blend shapes that correspond to individual facial muscle activations for different parts of the face (eyes, jaw, mouth, cheeks, nose and tongue). In addition, eye gaze and head rotations are tracked, making it possible to capture all the head and face behaviour needed for the Furhat robot. In FaceCore, ARKit parameters can be used interchangeably with the standard set (described above) or in combination with it, in which case their effects are additive.

The workflow for capturing a facial performance and turning it into a gesture that can be used as part of an interactive skill is straightforward:

1. A facial performance is captured using an iPhone X (or later model). Capture is done using a third-party app (Live Link Face from Epic Games), which saves face motion (52 blend shapes + 3 DoF head rotations @ 60 fps) to a CSV file. It also saves a video including sound.

2. The captured data is transferred from the phone to a computer and imported into a custom Gesture Capture tool. This tool makes it possible to modify or simplify the recording and, optionally, to speed up or slow down the gesture or change its amplitude, before exporting it as a JSON file.

3. The last step is to invoke gestures from within a skill on the robot. When a skill is built in the SDK, the exported JSON files are placed in the resource folder of the skill, which makes them accessible for invocation from the application logic.
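The data flow behind steps 1 and 2 can be sketched in a few lines of Python. This is not the Gesture Capture tool itself, and it does not reproduce the real Live Link Face CSV layout or the JSON schema the SDK expects: the `time` column, the JSON keys and all function names below are hypothetical. The sketch covers blend shape weights only, which ARKit reports in the range 0 to 1; head rotations are left out.

```python
import csv
import io
import json


def load_frames(csv_text):
    """Parse CSV text into a list of (time_seconds, {parameter: weight})."""
    frames = []
    for row in csv.DictReader(io.StringIO(csv_text)):
        time = float(row.pop("time"))  # assumed column name
        frames.append((time, {name: float(value) for name, value in row.items()}))
    return frames


def retime_and_scale(frames, speed=1.0, amplitude=1.0):
    """Speed up or slow down a gesture and scale its amplitude.

    speed > 1 makes the gesture shorter; amplitude scales every weight,
    and the result is clamped to the valid [0, 1] range.
    """
    if speed <= 0:
        raise ValueError("speed must be positive")
    return [
        (
            time / speed,
            {name: min(1.0, max(0.0, weight * amplitude))
             for name, weight in weights.items()},
        )
        for time, weights in frames
    ]


def to_json(name, frames):
    """Serialize a gesture to JSON (illustrative schema, not the SDK's)."""
    payload = {
        "name": name,
        "frames": [{"time": time, "params": weights} for time, weights in frames],
    }
    return json.dumps(payload, indent=2)


# Two made-up frames standing in for a captured recording.
recording = "time,jawOpen,eyeBlinkLeft\n0.0,0.2,0.0\n0.5,0.8,1.0\n"
gesture = retime_and_scale(load_frames(recording), speed=2.0, amplitude=1.5)
exported = to_json("surprise", gesture)
```

Here the gesture is played twice as fast and exaggerated by 50 percent; weights that would exceed full activation are clamped rather than allowed to overshoot.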

The ability to animate the robot’s face and head via ARKit parameters opens up a host of possibilities to developers, researchers and users of the Furhat robot.

Recording gestures and prompts and using them as building blocks in a robot skill makes it possible to build highly expressive interactive content. It is also possible to record entire performances, e.g. of speech or singing.

But this is only the beginning. The ability to capture and synthesize facial performances on a robotics platform is, in our opinion, a true research enabler. When these capabilities are exposed in the SDK, advanced users can develop custom experiments, e.g. live streaming of face data to build advanced Wizard-of-Oz interaction setups or even telepresence solutions. The capture-synthesis link also lends itself to a form of experimentation where signals are manipulated, possibly in real time, in order to investigate the effect of social cues, a paradigm known as transformed social interaction. An example could be a hypothetical experiment studying the effect of eye contact in face-to-face interaction, where all face parameters are copied from the user except for the eye gaze, which is manipulated in a controlled fashion.
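The eye contact experiment described above boils down to a per-frame filter. The Python sketch below assumes that each captured frame is a dictionary of parameter weights and that gaze is carried by the ARKit parameters whose names begin with `eyeLook` (such as `eyeLookInLeft`). The frame representation and the function name are hypothetical, and a real setup would also need to handle streaming and timing.

```python
GAZE_PREFIX = "eyeLook"  # assumption: gaze parameters share this name prefix


def transform_frame(user_frame, gaze_override):
    """Copy all parameters from the user's frame except eye gaze.

    user_frame:    dict of parameter name -> weight captured from the user.
    gaze_override: dict of gaze parameter name -> weight chosen by the
                   experimenter; it replaces the user's own gaze entirely.
    """
    for name in gaze_override:
        if not name.startswith(GAZE_PREFIX):
            raise ValueError(f"not a gaze parameter: {name}")
    # Keep everything that is not gaze, then apply the controlled gaze.
    transformed = {
        name: weight
        for name, weight in user_frame.items()
        if not name.startswith(GAZE_PREFIX)
    }
    transformed.update(gaze_override)
    return transformed


captured = {"jawOpen": 0.3, "mouthSmileLeft": 0.5, "eyeLookInLeft": 0.7}
direct_gaze = {"eyeLookInLeft": 0.0, "eyeLookOutLeft": 0.0}
robot_frame = transform_frame(captured, direct_gaze)
```

The robot then mirrors the user's smile and jaw movement while its gaze follows the experimental condition instead of the user's actual eye movements.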

Finally, since face animation data can be captured from users in high quality and in large quantities, data-driven synthesis based on statistical and machine learning approaches is also within reach.

So to sum up: the Furhat face just got more expressive, and we put the researchers, developers and innovators of the Furhat community in control. Now we can’t wait to see how they will express themselves!

If you missed the first blog in the FaceCore series, find it here.

Interested in learning more about FaceCore and the 2.0 Software & SDK Release?

Visit the release announcement page to dive into the new features